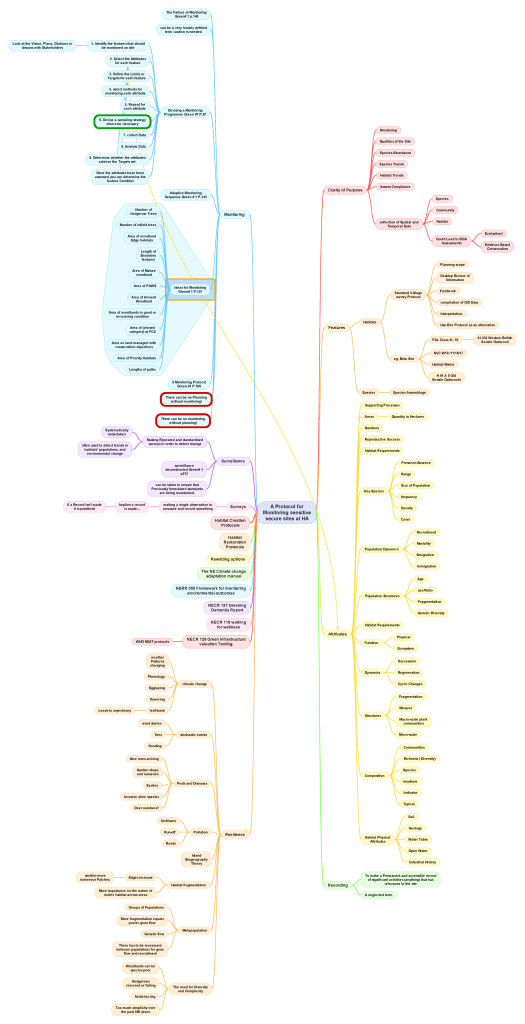

This is my protocol from my civil service days. I used it (in whole or in part) to educate, plan, or review monitoring programmes. Monitoring is hard to plan and very hard to do. In the UK, funding is often awarded for a ‘project’, but the concept of whole-life costs is rarely appreciated. The money wasted on community lottery funding schemes is heartbreaking: projects are devised and money is spent with no conception of maintenance or longer-term costs. Locally, I know of over £3m in lottery funding that has been wasted on ill-conceived schemes with no oversight, with unqualified charities signing cheques with no regard for quality or care.

As a Gateway Reviewer I was often brought in too late in a failing scheme, and my role then was merely to record the errors and failures. When we were brought in at the strategic planning or business case stage, things went better.

This plan was my basic script for programme oversight and I found I could frequently reverse engineer it to interpret what had gone wrong or explain how a programme failed.

I experienced three types of monitoring:

- Curiosity or passive monitoring. This is what I often undertake on Cilfái, and it became the output in Cilfái: Woodland Management and Climate Change.

- Mandated monitoring. A requirement imposed on a programme as part of its funding and appraisal system.

- Question-driven monitoring. The dreaded ‘research question’ approach of many academic bodies is often a waste of talent and time, done merely to get a tick in a box. This is the stuff that is funded, completed, and locked away for ever. The best use of resources does come through good-quality research questions. However, I used to read questions that were long and incomprehensible…so how do you answer them…or, better yet, learn from them?

As a reviewer for government, I saw monitoring programmes that were ineffective or failed completely. It doesn’t help that many in the biodiversity sector see monitoring as a ‘management activity’ which is unrelated to scientific research.

The failures I saw

- Short-term funding preventing planning. Question-driven monitoring pushed staff to plan backwards…data first, question later. Also, data management was often dreadful, with no structure or futureproofing for reviewers. A monitoring timescale can be a decade…who stays around for ten years on a programme in biodiversity? Nobody…which makes succession planning vital, but it was never done. The loss of key personnel and the corporate amnesia that follows were harmful for staff and quality, but often treasured by politicians and managers who want a predecessor to own mistakes.

- The Shopping List approach. An impossibly long list of monitoring topics instead of a sharply defined monitoring objective. It is backlog planning in programme speak.

- Failure to agree on what to monitor. Often politicians and managers want career-enhancing metrics…not realities.

- Flawed assumptions. There is a very human desire to compare one set of data with something else, or to associate it with something that looks good but has incompatible data. The temptation to ‘salt’ data on key metrics (e.g. bats, reptiles, invasive species) was overpowering. An overclaim is more likely to get published.

It is a big graphic, so you may need to enlarge it.